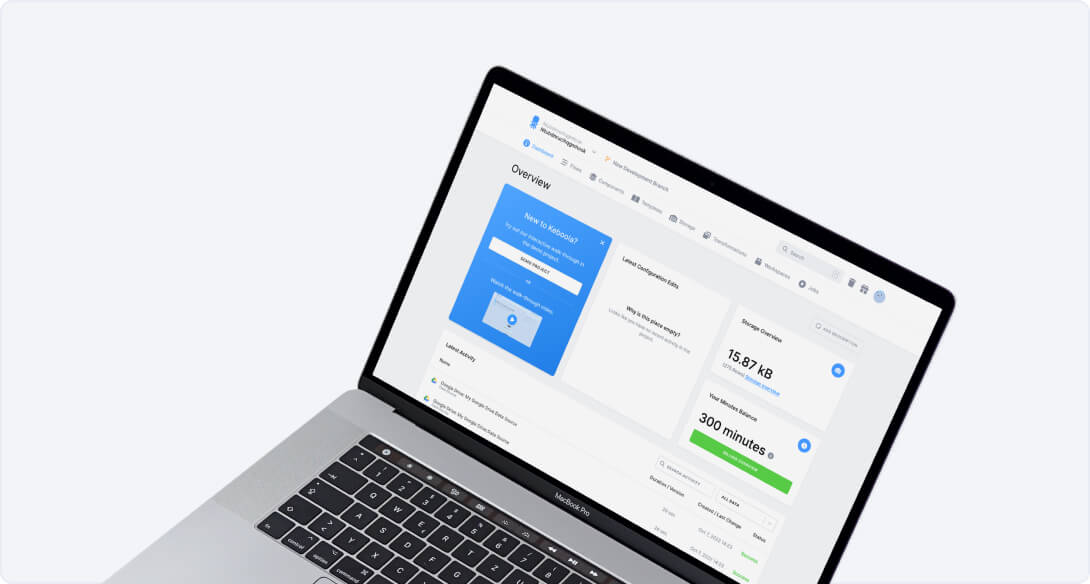

Run your data operations on a single, unified platform.

- Easy setup, no data storage required

- Free forever for core features

- Simple expansion with additional credits

Conditional Flows Now Available!

Workflows That Think & Save You Up to 75% on Your Cloud Bill

Build Reliable Data Pipelines with Keboola Conditional Flows.

Source → Transform → Ship

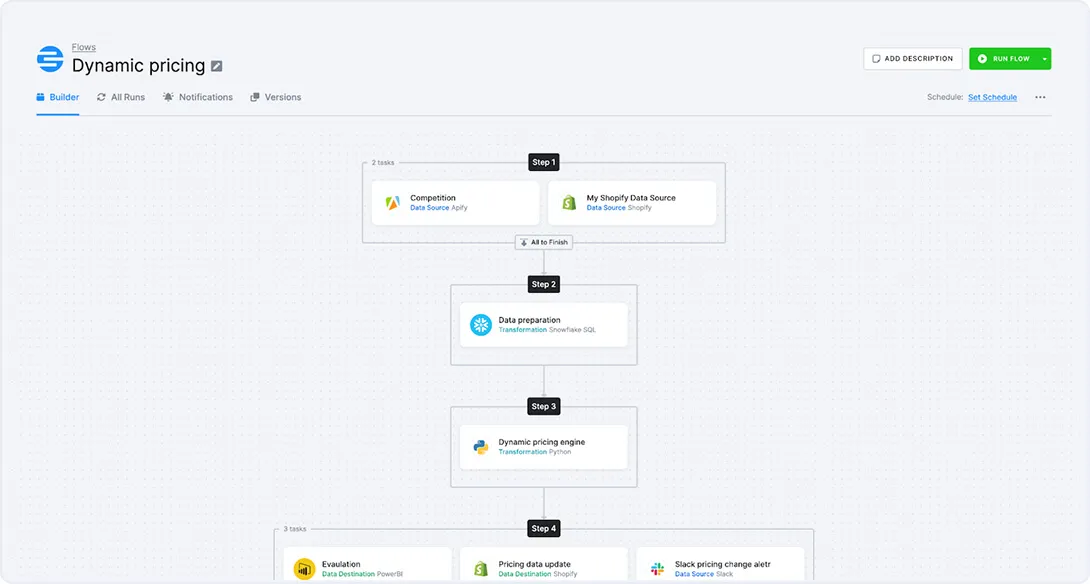

Build Flows with AI, UI, or Code

You can start with a blank canvas, reuse existing flows, or prompt AI agents to scaffold your logic.

Consolidate, Build, and Deploy – All in One

What sets Keboola's Flows apart from other orchestration solutions

Visual Flow Builder

Design complex conditional logic with an intuitive GUI. Create branches, loops, and parallel paths with clicks and drags - no JSON, Python, or YAML configurations required.

All-in-One Platform

Keboola can replace up to 50% of your SaaS data tools - freeing your engineers to focus on high-impact projects, not endless automations.

Connect Anything

Flows integrate with everything: databases, SaaS apps, files, APIs, and business processes. Trigger Slack alerts, update CRMs, or call any API - all within conditional branches.

Granular Logic Control

Skip unnecessary compute, run tasks only when needed, and parallelize intelligently. Save significant cloud costs by avoiding wasted processing with dynamic execution paths.

AI-Powered

Work with Keboola in whatever way fits your vibe. Do you prefer AI agents, IDEs, code editors, visual interfaces, or the API? We've got you covered with Cursor, Claude, Windsurf, or the CLI.

Source → Transform → Destination

Build Smart, Reliable Data Pipelines with Keboola Flow Builder

All the data integrations you’ll ever need in one place

FAQs

What exactly does “conditional” mean in Conditional Flows?

It means your data pipeline can make decisions based on real-time conditions. For example: “If a file is missing, skip the step”, or “If a data quality check fails, send a notification and stop”. No more static pipelines – you now have logic-driven, adaptable workflows.
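To make the idea concrete, here is a minimal Python sketch of the two example rules above. It is not Keboola's API or configuration format: `run_pipeline`, the step names, and the `quality_check` callable are hypothetical, and the "notification" simply records a message instead of posting to Slack.

```python
from pathlib import Path
from typing import Callable


# Hypothetical illustration of conditional steps; not Keboola's API.
def run_pipeline(input_path: Path, quality_check: Callable[[], bool]) -> list[str]:
    """Run a two-rule pipeline and return a log of what happened."""
    log: list[str] = []

    # Rule 1: if the input file is missing, skip the load step (but keep going).
    if input_path.exists():
        log.append("run: load_file")
    else:
        log.append("skip: load_file (file missing)")

    # Rule 2: if the data quality check fails, notify and stop.
    if not quality_check():
        log.append("notify: data quality check failed")  # stand-in for a Slack alert
        log.append("stop")
        return log

    log.append("run: publish")
    return log
```

With a missing input file and a passing check, the log records the skipped load and the publish step still runs; with a failing check, the pipeline stops before publishing.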

Why would I need conditional logic in a data pipeline?

Because real-world data is messy and unpredictable. APIs fail. Data arrives late. Tasks run long. With Conditional Flows, your pipeline adapts instead of breaking. You can auto-retry, reroute, skip steps, or stop jobs to avoid costly failures and delays.

Can Conditional Flows help reduce compute costs?

Yes. You can avoid running unnecessary tasks (e.g. “skip if no new data”) or kill long-running jobs before they rack up charges. Customers have reported a reduction of up to 75% in wasteful executions using this feature.
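The “skip if no new data” pattern usually comes down to comparing incoming records against a watermark saved by the previous run. The Python sketch below assumes rows shaped as `(updated_at, payload)` tuples and a hypothetical `maybe_transform` step; it illustrates the idea only and is not how Keboola implements incremental processing.

```python
from datetime import datetime

Row = tuple[datetime, str]  # (updated_at, payload): a hypothetical row shape


def maybe_transform(rows: list[Row], last_watermark: datetime) -> tuple[str, datetime]:
    """Run the costly transform only if rows arrived after the last watermark.

    Returns a status message and the watermark to store for the next run.
    """
    fresh = [row for row in rows if row[0] > last_watermark]
    if not fresh:
        # Condition met: nothing new, so skip and spend no compute.
        return "skipped: no new data", last_watermark

    # Placeholder for the real (billable) transformation.
    transformed = [payload.upper() for _, payload in fresh]
    new_watermark = max(updated_at for updated_at, _ in fresh)
    return f"transformed rows: {len(transformed)}", new_watermark
```

Storing the returned watermark means the next run only pays for rows it has not seen yet.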

How do I create a Conditional Flow – do I need to code it?

No coding required. The Keboola UI lets you build logic visually or configure it via JSON if you prefer code. You define conditions, and then actions (retry, skip, notify, kill, etc.) tied to those conditions. It’s easy to test and adjust as you go.
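If you prefer code, a set of conditions and their actions can be expressed as data. The JSON below uses a made-up rule format (`when`, `then`, `equals`, `greater_than`) purely for illustration; it is not Keboola's flow configuration schema. The surrounding Python checks the rules in order and returns the first matching action.

```python
import json
from typing import Any

# Hypothetical rule format for illustration only; not Keboola's flow schema.
RULES_JSON = """
[
  {"when": {"field": "task_status", "equals": "error"}, "then": "retry"},
  {"when": {"field": "row_count", "equals": 0}, "then": "skip"},
  {"when": {"field": "duration_s", "greater_than": 3600}, "then": "kill"}
]
"""


def matches(cond: dict[str, Any], ctx: dict[str, Any]) -> bool:
    """Evaluate one condition against the current run context."""
    value = ctx.get(cond["field"])
    if "equals" in cond:
        return value == cond["equals"]
    if "greater_than" in cond:
        return value is not None and value > cond["greater_than"]
    raise ValueError(f"unsupported condition: {cond}")


def choose_action(
    rules: list[dict[str, Any]], ctx: dict[str, Any], default: str = "continue"
) -> str:
    """Return the action of the first matching rule, or the default."""
    for rule in rules:
        if matches(rule["when"], ctx):
            return rule["then"]
    return default


RULES = json.loads(RULES_JSON)
```

Keeping rules as data makes them easy to test and adjust: changing a threshold means editing one JSON value, not the pipeline code.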

What kinds of conditions can I use?

You can base conditions on:

- Task results (success, failure, error)

- Data checks (row count, null values, test results)

- External triggers (webhooks, variable states)

- Runtime behavior (duration, system variables like day of week)

Conditions support logic like AND, OR, NOT for complex scenarios.
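Combining conditions with AND, OR, and NOT is essentially function composition over a run context. In this Python sketch the context keys (`task_status`, `row_count`, `null_count`, `weekday`) and the helper names are hypothetical stand-ins for the condition types listed above.

```python
from typing import Any, Callable

Context = dict[str, Any]
Condition = Callable[[Context], bool]


def all_of(*conds: Condition) -> Condition:
    """AND: true only if every condition holds."""
    return lambda ctx: all(cond(ctx) for cond in conds)


def any_of(*conds: Condition) -> Condition:
    """OR: true if at least one condition holds."""
    return lambda ctx: any(cond(ctx) for cond in conds)


def negate(cond: Condition) -> Condition:
    """NOT: inverts a condition."""
    return lambda ctx: not cond(ctx)


# Leaf conditions drawn from the categories above (hypothetical context keys).
def task_failed(ctx: Context) -> bool:
    return ctx["task_status"] == "error"


def no_rows(ctx: Context) -> bool:
    return ctx["row_count"] == 0


def has_nulls(ctx: Context) -> bool:
    return ctx["null_count"] > 0


def is_weekend(ctx: Context) -> bool:
    return ctx["weekday"] >= 5  # Monday == 0, as in datetime.weekday()


# Alert if the task failed OR (rows loaded AND nulls found AND NOT weekend).
should_alert = any_of(task_failed, all_of(negate(no_rows), has_nulls, negate(is_weekend)))
```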

Can I use Conditional Flows for error handling and retries?

Yes – this is one of its core benefits. You can define fallback actions:

- Retry the task up to X times

- Reroute to a backup extractor

- Notify a Slack channel and continue

- Stop the pipeline to prevent bad data

This self-healing behavior dramatically reduces manual oversight.
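The fallback chain above (retry, reroute, then either notify and continue or stop) can be sketched in Python as follows. `run_with_fallbacks`, `PipelineStopped`, and the `on_failure` values are hypothetical names, and `notify` is any callable you supply, for example one that posts to Slack.

```python
from typing import Callable, Optional


class PipelineStopped(Exception):
    """Raised to halt the pipeline so bad data does not propagate."""


def run_with_fallbacks(
    primary: Callable[[], str],
    backup: Optional[Callable[[], str]],
    on_failure: str,  # "continue" or "stop" (hypothetical policy values)
    max_retries: int,
    notify: Callable[[str], None],
) -> Optional[str]:
    last_error: Optional[Exception] = None

    # 1. Run the primary task once, then retry up to max_retries more times.
    for _ in range(1 + max_retries):
        try:
            return primary()
        except Exception as exc:
            last_error = exc

    # 2. Reroute to a backup extractor, if one is configured.
    if backup is not None:
        try:
            return backup()
        except Exception as exc:
            last_error = exc

    # 3. Either notify and continue, or stop the whole pipeline.
    if on_failure == "continue":
        notify(f"task failed, continuing: {last_error}")
        return None
    raise PipelineStopped(str(last_error))
```

Raising an exception for “stop” lets the caller halt every downstream step in one place, while “continue” returns `None` so later steps can decide how to treat the missing result.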

Can I set different logic depending on when or how the pipeline runs?

Yes. Conditional Flows can use system variables like dates, runtime conditions, or webhook signals.

For example:

- “If today is Saturday, skip campaign updates”

- “If triggered by alert webhook, run additional validation step”
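Both examples can be sketched as a small planning function that reads run-time variables before choosing which steps to execute. The `trigger` string format (`"schedule"`, `"webhook:alert"`) and the step names are assumptions made for illustration, not Keboola variables.

```python
from datetime import date


# Hypothetical run context: `trigger` is "schedule" or "webhook:<name>".
def plan_steps(run_date: date, trigger: str) -> list[str]:
    """Decide which steps to run from the run date and the trigger source."""
    steps = ["extract", "transform"]

    # "If today is Saturday, skip campaign updates" (Saturday is weekday() == 5).
    if run_date.weekday() != 5:
        steps.append("update_campaigns")

    # "If triggered by alert webhook, run additional validation step".
    if trigger == "webhook:alert":
        steps.append("extra_validation")

    return steps
```

For example, `plan_steps(date(2024, 6, 1), "schedule")` falls on a Saturday, so the campaign update is left out of the plan.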

What’s the business impact of using Conditional Flows?

📉 Fewer failures = higher reliability

🔄 Automated error handling = reduced downtime

💰 Smart branching = less cloud spend

🧠 Smarter workflows = more scalable data ops

Your team saves time, money, and builds resilience into the pipeline.

Can Conditional Flows replace orchestration tools like Airflow?

Yes. Conditional Flows offer robust task orchestration natively in Keboola, eliminating the need for external orchestration tools such as Airflow or Dagster.

Is this only useful for large companies or complex data teams?

Not at all. Whether you’re a solo analyst or a large data team, Conditional Flows simplify handling everyday problems, such as retrying failed tasks, skipping empty loads, or sending alerts when something breaks.